This is not about any specific case. It’s just a theoretical scenario that popped into my mind.

For context, in many places it is required to label AI-generated content as such; in other places it is not required, but it is considered good etiquette.

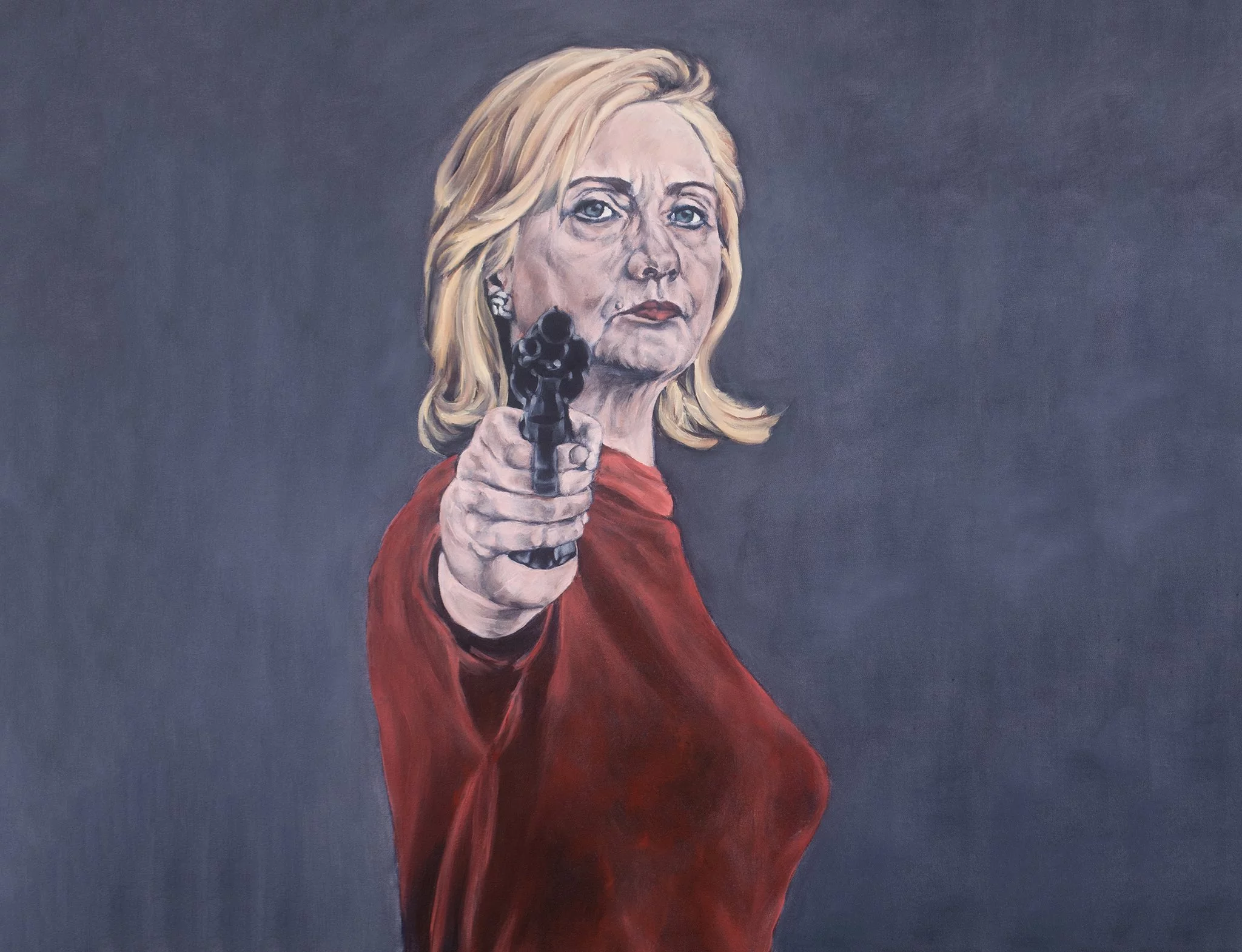

But imagine the following: an artist is going to make an image. The normal first step is to search for references online and then do the drawing, taking reference from those. But this artist cannot find proper references online, or maybe the artist wants to experiment, and so decides to use a diffusion model to generate a bunch of AI images for reference. Then the artist proceeds to draw the image, taking the AI images as references.

The picture is 100% handmade, each line was manually drawn. But AI was used in the process of making this image. Should it have some kind of “AI warning label”?

What do you think?

I think it should be marked under the same conditions in which you would credit another artist whose drawings your work is based on. Is it a close reproduction of an AI-generated piece? You should mark it as AI-assisted. Did you use AI-generated content as general inspiration to create your own unique artwork? No need to mark it in that case.

No. Look, it’s reference; as long as it’s reference and not a study or copy, no one should care. In the same way, if you pose a 3D model that someone else made, render it out, and use that as reference, you don’t have to mention it.

A case like that doesn’t concern me much. If AI has a place in art, it’s as a tool like that.

Pardon the term, but to me the hysteria about AI-generated content as some sort of cooties that taints whatever it touches is silly.

AI content is generally not art because there is not the same intentionality behind it in an ontological sense, in the same way pretty patterns that naturally occur are not art. You can be inspired by whatever; I’d just call you a shit artist if you trace some AI content and call it your art.

You could argue that it can be art in the same way that guided natural processes can be art. Using tools does not immediately make something not art, but looking like art also does not necessarily make something art – it is an interplay between an artisan and their tools to shape the world with intentionality. I think it’s just a higher bar to clear with tools that could possibly make some of the “creative decisions”.

No, though I think the right thing for the artist to do would be to disclose that the references were AI.

Artists draw from reference all the time, regardless of whether the references are random google image search results, or photos they have taken themselves. Generally we have never expected artists to share exactly what references were used, because it’s simply part of the drawing process.

If those references happen to be AI, what does that change?

It just gives the audience a clearer picture of the artist’s process. I’m not saying artists (and btw I’m an artist) need to disclose every piece of reference they use for everything, every time. However, many artists do share their process, and the way in which reference is used can vary greatly. Many artists use reference to practically copy the subject; others just use reference to understand the subject and then create something completely different, or the same subject in a completely different pose.

As someone consuming art, I would appreciate knowing what type of mastery and skills the artist has: did they envision this in their minds? How much? What inspired them? Is this an accident or deliberate? Etc. This may be irrelevant to some people, but many at some point want to understand how the artist thinks and feels.

Before AI it would have been obvious that if the subject is not realistic and not found elsewhere then it has to be the artist’s imagination, or an accident. Now an artist could be copying AI instead and you would never know.

I find that artists that tend to copy their references with high fidelity (such as many wildlife painters or illustrators, or personal portraits) are also among those disclosing their references the most. This makes the audience appreciate the artists’ skills more. You can see the difference between the original and the result whereas you would otherwise have to guess.

Thanks for the explanation, makes sense.

This is like in the 00s when every comic book artist was obviously using 3D modeling software but none of them would admit to it. You can’t expect artists to be honest about this sort of stuff because they’d just be putting themselves at a disadvantage to the ones who lie about it. Furthermore, it doesn’t really matter, does it?

Yes. And photos should also have a disclaimer about what kinds of edits were done, regardless of whether they were done manually or by AI.

I keep seeing stupid ideas on social media because people believe edited photos match reality.

Most places I’m aware of will mention if AI is used in the creation of some product, whether or not it is directly displayed in the end result.